Cloud is a great enabler. The concept of software-defined infrastructure has been instrumental in bringing innovative new products to market. Companies small and large are realizing the benefits of not being tied to their own hardware and instead procuring as much capacity as they need, when they need it, on demand. Markets are volatile and unpredictable, and nobody is comfortable making decisions about an uncertain future. The model that works best in those conditions is a flexible one. The cloud's elasticity lets organizations take calculated risks, experiment, innovate, and respond quickly to market changes without worrying too much about the ROI on their hardware investments.

Cloud computing and the S-Curve

Cloud computing is at a very interesting point in its history, and we are seeing some unique trends. Who knew managing data centers would be so interesting? The technologies powering the cloud are innovating rapidly, driven by several factors: efficient use of data center space, security, decentralization, and the ease of taking an application from development to production. There is a lot happening in this space, but there is still much to be done.

In his book The Singularity Is Near, Ray Kurzweil describes the evolution of technology as follows:

Each technology develops in three stages:

- Slow growth (the early phase of exponential growth).

- Rapid growth (the late, explosive phase of exponential growth).

- A levelling off phase as a particular technology paradigm matures.

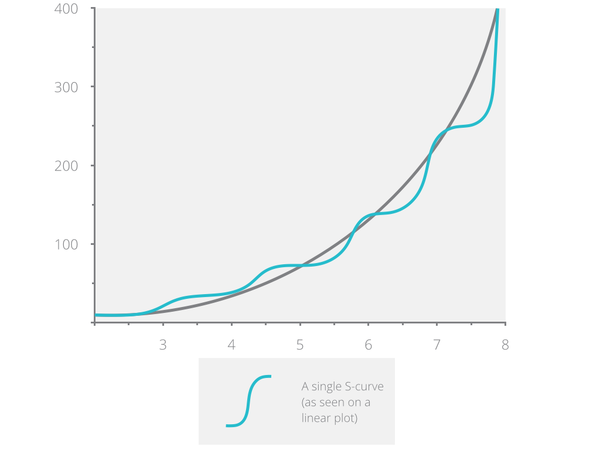

The progression through these three stages traces the shape of the letter S. The S-curve illustration above shows how an ongoing exponential trend can be composed of a cascade of S-curves. Each successive S-curve is faster, owing to the lessons that feed forward from the previous ones.
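The three stages map naturally onto the logistic function, a common mathematical model of an S-curve. The Python sketch below is illustrative only: the logistic form, the functions `s_curve` and `cascade`, and the values in `PARADIGMS` are hypothetical choices, not taken from Kurzweil. Each tuple is (ceiling, midpoint, rate), and every later paradigm is assumed to be higher, later and steeper, so their sum keeps rising long after any single curve has levelled off.

```python
import math


def s_curve(t, ceiling=1.0, midpoint=0.0, rate=1.0):
    """Logistic function: slow start, rapid middle, levelling off near `ceiling`."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


def cascade(t, paradigms):
    """Sum of successive S-curves; each paradigm is (ceiling, midpoint, rate)."""
    return sum(s_curve(t, c, m, r) for c, m, r in paradigms)


# Hypothetical paradigms: each one has a higher ceiling, arrives later and
# grows faster than the last, mimicking lessons that feed forward.
PARADIGMS = [(1.0, 0.0, 1.0), (2.0, 8.0, 1.5), (4.0, 14.0, 2.0)]

if __name__ == "__main__":
    for t in range(-4, 21, 2):
        print(f"t={t:3d}  single={s_curve(t):.3f}  cascade={cascade(t, PARADIGMS):.3f}")
```

Printing the table shows the single curve flattening out near 1.0 while the cascade keeps climbing as each new paradigm takes over. Doubling the ceilings is what makes the overall envelope look roughly exponential.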


Cloud computing as a whole can be seen as a composition of several aspects: compute, networking and storage. There is a lot of momentum in networking and storage; in networking, for example, there are Network Function Virtualization (NFV) and Software Defined Networking (SDN). For now, I would like to focus on compute.

Virtualization

The concept of virtualization is not new. It dates all the way back to the 1960s, when IBM developed the CP-40 time-sharing system. Cloud computing, though, is relatively recent, and it has continually pushed the limits of virtualization technologies. Hypervisor-based virtual machines sit on the levelling-off (top right) part of the S-curve. This space has matured, driven by a number of large public clouds such as Amazon and Rackspace. It is battle tested and mature, but it has some limitations. Booting a guest as a complete virtual instance of an operating system means a whole kernel is loaded for every guest. This has several disadvantages: slow boot times, wasted data center capacity, and a large attack surface. The full Linux kernel source tree is around 16 million lines of code, and a codebase that large has many vulnerabilities.

Containers

Containers are relatively new. Linux Containers (LXC) first came out in 2008. LXC provides OS-level virtualization, meaning a single kernel provides multiple isolated user-space execution environments. After a slow start, containers are fast becoming mainstream and seeing rapid innovation. They are in the rapid exponential growth phase of the S-curve. Support for OS-level virtualization means that you don't need to boot a whole kernel for every virtual instance you need. For an organization that operates heavily in the cloud, this is a big win: it saves a lot of data center capacity while keeping the architecture simple. Single-purpose VMs, though desirable, waste a lot of resources. With containers, much of that waste goes away. Google has used containers in its data centers for years, since full-blown server virtualization carries too much overhead at the scale it operates. Docker has drawn a lot of attention with technology that makes containers really easy to use.
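The difference between "one kernel per VM" and "one shared kernel" can be illustrated with a toy model of one mechanism LXC builds on, Linux PID namespaces: every container sees its own process numbering starting at 1, while a single shared kernel keeps the real process table. The classes `SharedKernel` and `Container` are hypothetical plain Python that only simulates the idea; they do not call any real kernel, LXC or Docker API.

```python
import itertools


class SharedKernel:
    """Toy model: ONE kernel owns the global process table for every container."""

    def __init__(self):
        self._next_pid = itertools.count(1)
        self.processes = {}  # global pid -> (container name, command)

    def spawn(self, container_name, command):
        gpid = next(self._next_pid)
        self.processes[gpid] = (container_name, command)
        return gpid


class Container:
    """Isolated view over the shared kernel, loosely modelled on a PID namespace."""

    def __init__(self, kernel, name):
        self.kernel = kernel
        self.name = name
        self._local_to_global = {}
        self._next_local = itertools.count(1)

    def run(self, command):
        # The real process lives in the shared kernel; the container only
        # records a local pid that maps onto it.
        gpid = self.kernel.spawn(self.name, command)
        lpid = next(self._next_local)
        self._local_to_global[lpid] = gpid
        return lpid

    def ps(self):
        """A container sees only its own processes, numbered from 1."""
        return {lpid: self.kernel.processes[gpid][1]
                for lpid, gpid in self._local_to_global.items()}


if __name__ == "__main__":
    kernel = SharedKernel()
    web, db = Container(kernel, "web"), Container(kernel, "db")
    web.run("nginx")
    db.run("postgres")
    web.run("worker")
    print(web.ps(), db.ps(), len(kernel.processes))
```

Both containers believe they own pid 1, yet only one kernel exists. That is the saving the paragraph describes, and also why a bug in that shared kernel affects every container on the host.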

Microkernels

Now, let's talk about the far left of the S-curve. Containers still do not solve the security problem. Because all containers on a host share the same kernel, a large general-purpose kernel still exposes a large attack surface, unless the host runs a minimal distribution designed specifically to run containers. In the Docker world, a few operating systems such as CoreOS and RancherOS describe themselves as minimal Linux distributions whose only purpose is to run Docker containers. This is where it gets interesting.


Microkernels and library operating systems have not received much mainstream attention so far, but they seem to be the next logical step forward. Microkernels are built from the ground up around minimality. A common rule of thumb is that a microkernel should not exceed 10,000 lines of code. Only what is absolutely required to make an operating system goes into the kernel: low-level address space management, thread management, and interprocess communication (IPC). Traditional operating system functions such as file systems, networking, and device drivers run in user space as separate servers that communicate through the kernel's message passing. Microkernels were hot in the 1980s, but they never achieved mainstream adoption, largely because of performance concerns.
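To make that split concrete, here is a toy Python model in which the "kernel" does nothing but route messages, while a file system runs as an ordinary user-space server. `Microkernel`, `FileServer`, the endpoint names and the message tuple format are all hypothetical; real microkernels implement IPC very differently and far more efficiently.

```python
from collections import deque


class Microkernel:
    """Toy microkernel: its only service here is IPC (routing messages)."""

    def __init__(self):
        self.mailboxes = {}

    def register(self, endpoint):
        self.mailboxes[endpoint] = deque()

    def send(self, endpoint, message):
        if endpoint not in self.mailboxes:
            raise LookupError(f"no such endpoint: {endpoint}")
        self.mailboxes[endpoint].append(message)

    def receive(self, endpoint):
        box = self.mailboxes[endpoint]
        return box.popleft() if box else None


class FileServer:
    """User-space file system server: all file logic lives outside the kernel."""

    def __init__(self, kernel, endpoint="fs"):
        self.kernel = kernel
        self.endpoint = endpoint
        self.files = {}
        kernel.register(endpoint)

    def serve_one(self):
        """Handle one pending request; return False if the mailbox was empty."""
        msg = self.kernel.receive(self.endpoint)
        if msg is None:
            return False
        op, path, data, reply_to = msg
        if op == "write":
            self.files[path] = data
            self.kernel.send(reply_to, ("ok", None))
        elif op == "read":
            self.kernel.send(reply_to, ("ok", self.files.get(path)))
        else:
            self.kernel.send(reply_to, ("error", op))
        return True
```

An application would register its own endpoint, send `("write", path, data, "app")` to `"fs"`, and wait for the reply. Each file operation costs two trips through the kernel (request and reply); in a real system each trip involves a context switch, which is where the performance concerns come from.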

Exokernels

Library operating systems are built on the exokernel idea. Exokernels are based on the principle of imposing as few hardware abstractions as possible on OS developers. Monolithic kernels provide hardware abstractions through interfaces such as virtual file systems, and microkernels are based on message passing. Exokernels, by contrast, are concerned only with protection and resource multiplexing. Any hardware service can then be implemented as a library, with whatever abstraction you want, rather than being limited to the abstractions the kernel provides (VFS, sockets, virtual memory, etc.). This means you can build your applications with only the libraries you need. Exokernels are tiny and very lightweight. One library operating system that comes to mind is MirageOS. It started as a research project at the University of Cambridge and is now a Xen incubation project. MirageOS is written in OCaml, a type-safe language, chosen mainly because it is lightweight and secure. Application code and the libraries it requires are compiled together into a unikernel that runs directly on the Xen hypervisor. MirageOS aims to provide security both by being written in a safe language like OCaml and by keeping each image very small to reduce the attack surface.
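The division of labour between an exokernel and a library OS can be sketched as a toy Python model: the "kernel" only hands out raw storage blocks and checks who owns them, while the application links in its own storage abstraction, here a key-value map instead of a kernel file system. `Exokernel`, `KeyValueLibOS` and the block model are hypothetical illustrations, not how MirageOS or any real exokernel is implemented.

```python
class Exokernel:
    """Toy exokernel: only multiplexes raw blocks and enforces ownership."""

    def __init__(self, num_blocks):
        self.blocks = [None] * num_blocks
        self.owner = [None] * num_blocks

    def allocate(self, app):
        # Resource multiplexing: hand out the first free block.
        for i, current in enumerate(self.owner):
            if current is None:
                self.owner[i] = app
                return i
        raise RuntimeError("no free blocks")

    def write(self, app, block, data):
        self._check(app, block)
        self.blocks[block] = data

    def read(self, app, block):
        self._check(app, block)
        return self.blocks[block]

    def _check(self, app, block):
        # Protection: the only policy the kernel enforces.
        if self.owner[block] != app:
            raise PermissionError(f"{app} does not own block {block}")


class KeyValueLibOS:
    """Hypothetical library OS: the app chooses its own storage abstraction."""

    def __init__(self, kernel, app):
        self.kernel = kernel
        self.app = app
        self.index = {}  # key -> block number, kept entirely inside the app

    def put(self, key, value):
        if key not in self.index:
            self.index[key] = self.kernel.allocate(self.app)
        self.kernel.write(self.app, self.index[key], value)

    def get(self, key):
        return self.kernel.read(self.app, self.index[key])


if __name__ == "__main__":
    disk = Exokernel(num_blocks=4)
    app_a = KeyValueLibOS(disk, "app-a")
    app_a.put("greeting", "hello")
    print(app_a.get("greeting"))
    try:
        disk.read("app-b", app_a.index["greeting"])
    except PermissionError as err:
        print("blocked:", err)
```

Another application could link a completely different library on top of the same `Exokernel` without the kernel changing at all, which is the flexibility the paragraph describes.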

The future is...

Microkernels and library OSes have yet to reach the rapid-growth phase of their evolution, but I am confident they will soon. Cloud-based businesses are exploding, and with them the challenges around efficient utilization and security are growing exponentially. In turn, so will the rate of innovation in these technologies. Efficient utilization means not only virtualized servers that take up very little space, but also servers that boot up and shut down almost instantly. When these technologies start climbing the S-curve rapidly, we will one day see an ephemeral machine that launches for every incoming request and vanishes as soon as it's done. The Future is Dust Clouds!

Sign up to receive the latest edition of P2 Magazine.